In recent years, headlines about AI being incorporated into automotive software solutions have become increasingly common. The establishment of dedicated AI facilities (e.g. Izmo’s Automotive AI Factory, Qualcomm’s AI R&D Center) and collaborative initiatives on Advanced Driver Assistance Systems (ADAS) development (e.g. Bosch & Cariad, GM & NVIDIA) are just a few examples of how the automotive sector is rapidly embedding AI across the vehicle lifecycle.

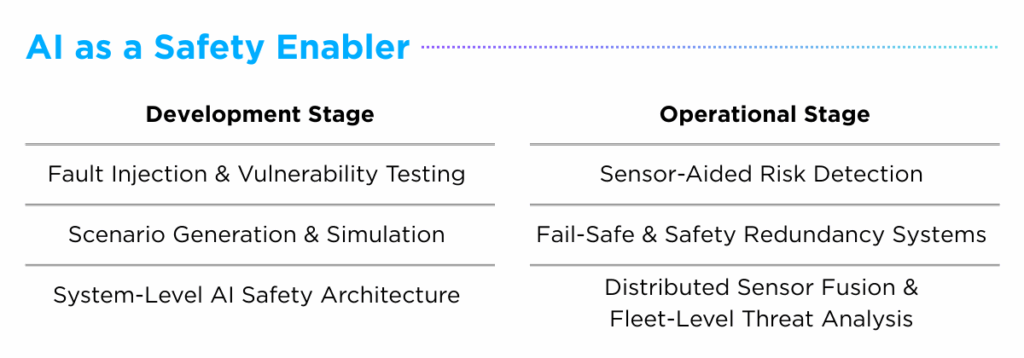

When it comes to automotive safety software, AI adoption has advanced along two simultaneous fronts. On one front, AI is positioned as a Safety Enabler, actively embedded in tools and solutions to strengthen resilience, detect risks and improve the reliability of vehicle platforms. On the other, AI is treated as a Safety-Critical Element, subject to rigorous standards and certifications to ensure that its deployment is trustworthy, robust and auditable.

This blog aims to explore these two complementary perspectives on AI in automotive safety — one driven by industry innovation and the other shaped by regulatory and standards-based assurance. Together, they illustrate how AI has evolved from a promising technology to a core component of both engineering practice and compliance frameworks.

AI as a Safety Enabler

Across both the development and operational stages, OEMs, Tier 1 suppliers and cybersecurity firms are applying AI to augment safety functions, strengthening resilience through proactive risk detection, automated testing and system-wide awareness.

I. Development Stage

In the development stage, AI is increasingly used to validate safety-critical components by automating test generation and expanding scenario coverage.

Fault Injection and Vulnerability Testing

Traditional fuzzing relies on random or manually crafted test inputs, which can miss subtle flaws. AI-enabled fuzzing, by contrast, generates protocol-specific, context-aware test cases at scale, uncovering vulnerabilities more quickly and systematically. A representative example is the AutoCrypt CSTP Security Fuzzer Solution, which leverages AI-generated inputs to probe in-vehicle communication protocols and expose weaknesses in ECUs, braking controllers and telematics units with greater depth and coverage.
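The difference between random and protocol-aware inputs can be illustrated with a toy example. The sketch below is not the method of any product named above: the frame layout (ID byte, length byte, payload, XOR checksum), the `parse_frame` target and both generators are hypothetical, and the structure is hand-coded here where an AI-based generator would be expected to learn it. It only shows why inputs that respect a protocol's structure get past early validation and reach deeper logic far more often than random bytes.

```python
import random

def checksum(body: bytes) -> int:
    """XOR of all bytes (hypothetical integrity check)."""
    result = 0
    for byte in body:
        result ^= byte
    return result

def build_frame(msg_id: int, payload: bytes) -> bytes:
    """Hypothetical layout: [id][length][payload...][checksum]."""
    body = bytes([msg_id, len(payload)]) + payload
    return body + bytes([checksum(body)])

def parse_frame(frame: bytes) -> bool:
    """Toy target: True only if the frame passes every validation step."""
    if len(frame) < 3:
        return False
    body, received = frame[:-1], frame[-1]
    if checksum(body) != received:
        return False
    return body[1] == len(body) - 2  # declared length must match payload

def random_fuzz(rng: random.Random, count: int) -> list:
    """Purely random byte strings of random length."""
    return [bytes(rng.randrange(256) for _ in range(rng.randrange(3, 12)))
            for _ in range(count)]

def structured_fuzz(rng: random.Random, count: int) -> list:
    """Random IDs and payloads, but with valid length and checksum fields."""
    frames = []
    for _ in range(count):
        payload = bytes(rng.randrange(256) for _ in range(rng.randrange(0, 9)))
        frames.append(build_frame(rng.randrange(256), payload))
    return frames

rng = random.Random(0)
random_accepted = sum(parse_frame(f) for f in random_fuzz(rng, 1000))
structured_accepted = sum(parse_frame(f) for f in structured_fuzz(rng, 1000))
print(random_accepted, structured_accepted)
```

Every structured frame passes validation, while a random frame has to guess both the length and checksum bytes correctly, so almost all random inputs are rejected before they exercise any interesting code path.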

Scenario Generation & Simulation

Another area where AI enhances safety is the generation of synthetic, edge-case scenarios that supplement baseline test datasets. A key challenge in ADAS and AV validation is coverage of rare, safety-critical scenarios; by generating them synthetically, AI allows engineers to proactively evaluate system safety under unusual conditions. The Gatik Arena platform illustrates this approach, employing techniques such as NeRFs, 3D Gaussian splatting and diffusion models to create synthetic scenarios, which are then fed into a modular simulation engine for end-to-end validation.

System-Level AI Safety Architecture

Beyond individual tools, AI is also embedded into holistic safety frameworks that span the entire lifecycle of software-defined vehicles. These frameworks account for the multi-dimensional nature of automotive software, monitoring and validating AI performance from training to deployment. The NVIDIA AI Systems Inspection Lab highlights this application, offering a safety framework that integrates cloud-based training oversight, model inspection and in-vehicle runtime validation to ensure system-wide assurance.

II. Operational Stage

AI also plays a crucial role in maintaining and extending safety during vehicle operation, both at the individual and fleet level.

Sensor-Aided Risk Detection

Leveraging multi-modal data fusion, AI enables vehicles to analyze real-time inputs from tires, cameras, radar and LiDAR to identify conditions that could compromise safety. The collaboration between AEye and Blue-Band illustrates this approach: by combining AEye’s OPTIS™ autonomous system and Apollo long-range LiDAR with Blue-Band’s AI orchestration platform, the solution delivers real-time insights for traffic monitoring, incident detection, and adaptive road safety management.

Fail-Safe & Safety Redundancy Systems

Traditional automotive safety systems often fail to account for systemic decision-making errors. AI addresses this limitation by continuously interpreting both the driving environment and system health to determine when fallback responses are necessary. The patent for Guident’s Remote Monitoring and Control Center (RMCC) illustrates this scenario: its AI-driven fusion system processes sensor data from multiple autonomous vehicles and can assume remote control when risk levels exceed predefined safety limits.
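The fallback logic described above, where control is handed over once a risk level exceeds a predefined limit, can be sketched in a few lines. This is only an illustration under stated assumptions: the `VehicleState` fields, the 0-to-1 scales, the `RISK_LIMIT` value and the way the two inputs are combined are all hypothetical and are not taken from the Guident patent.

```python
from dataclasses import dataclass

@dataclass
class VehicleState:
    vehicle_id: str
    environment_risk: float  # hypothetical scale: 0.0 (clear) to 1.0 (severe)
    system_health: float     # hypothetical scale: 1.0 (nominal) to 0.0 (failed)

RISK_LIMIT = 0.7  # hypothetical predefined safety limit

def risk_level(state: VehicleState) -> float:
    """Take the worse of environment risk and health degradation."""
    return max(state.environment_risk, 1.0 - state.system_health)

def select_mode(state: VehicleState) -> str:
    """Request remote control only when the risk level exceeds the limit."""
    return "REMOTE_CONTROL" if risk_level(state) > RISK_LIMIT else "AUTONOMOUS"

fleet = [
    VehicleState("shuttle-1", environment_risk=0.2, system_health=0.95),
    VehicleState("shuttle-2", environment_risk=0.3, system_health=0.10),
]
for state in fleet:
    print(state.vehicle_id, select_mode(state))
```

Using the maximum rather than an average is a deliberately conservative choice in this sketch: a single severe input (for example, a failing sensor in otherwise clear conditions) is enough to trigger the fallback.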

Distributed Sensor Fusion & Fleet-Level Threat Analysis

Because hazards caused by environmental disruptions affect entire fleets rather than single vehicles, AI enables fleet-level data aggregation and threat analysis, transforming distributed sensor inputs into system-wide safety insights. NIRA Dynamics’ partnership with BANF demonstrates this with the integration of triaxial tire sensor data into fleet management systems, enabling large-scale hazard detection and broadcast-level warnings to improve fleet safety.
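As a rough illustration of fleet-level aggregation, the sketch below groups hazard reports by road segment and flags a segment for a fleet-wide warning only when enough distinct vehicles report the same hazard. The report format, the segment identifiers and the `min_vehicles` threshold are assumptions made for this example and do not describe any partner's actual system.

```python
from collections import defaultdict

def segments_to_warn(reports, min_vehicles=3):
    """Return (segment, hazard) pairs confirmed by enough distinct vehicles.

    reports: iterable of (vehicle_id, road_segment, hazard_type) tuples.
    """
    reporters = defaultdict(set)
    for vehicle_id, segment, hazard in reports:
        # A set ignores repeated reports from the same vehicle.
        reporters[(segment, hazard)].add(vehicle_id)
    return sorted(key for key, vehicles in reporters.items()
                  if len(vehicles) >= min_vehicles)

reports = [
    ("v1", "seg-12", "ice"),
    ("v2", "seg-12", "ice"),
    ("v3", "seg-12", "ice"),
    ("v1", "seg-12", "ice"),      # duplicate from the same vehicle
    ("v4", "seg-40", "pothole"),
    ("v4", "seg-40", "pothole"),  # one vehicle repeating itself
    ("v4", "seg-40", "pothole"),
]
print(segments_to_warn(reports))
```

Counting distinct vehicles instead of raw reports keeps a single faulty sensor from triggering a broadcast on its own.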

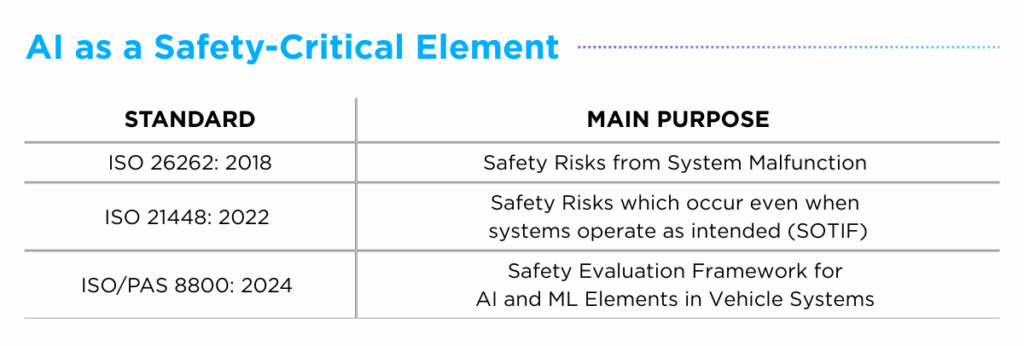

AI as a Safety-Critical Element

While AI enables safer and more resilient automotive systems, it is also recognized as a safety-critical element requiring rigorous evaluation to ensure trustworthiness. This perspective is reflected in a series of international standards: ISO 26262: 2018, ISO 21448: 2022 and ISO/PAS 8800: 2024.

I. ISO 26262: 2018 (Functional Safety)

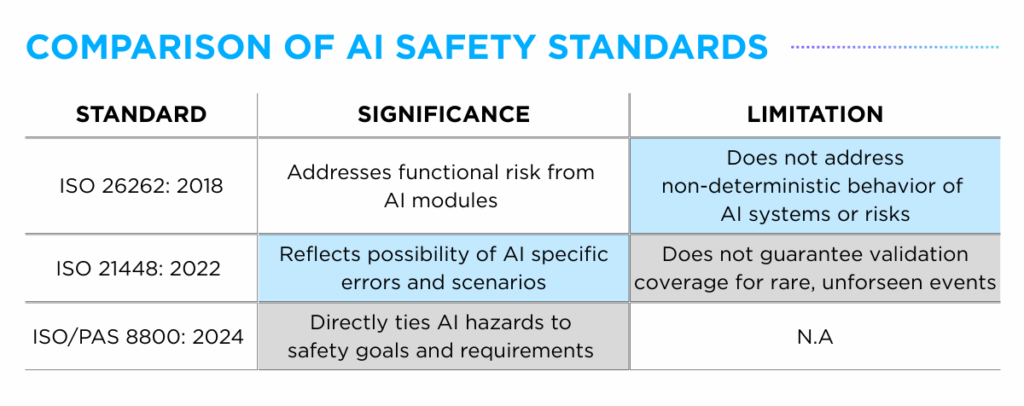

The ISO 26262 standard focuses on addressing hardware and software faults inside road vehicles that can lead to hazardous behavior. While it does not directly reference AI or ML, AI modules are implicitly covered as safety-related components that may fail due to defects in software implementation, hardware execution, or system integration.

The first connection appears in the item definition under Part 3 (Concept phase), where the “safety-related item” is established. Any component whose failure could lead to a hazard qualifies, and thus AI modules can be treated as such. Part 3 also covers Hazard Analysis & Risk Assessment (HARA), which identifies hazards caused by malfunctions and assigns each hazardous event an Automotive Safety Integrity Level (ASIL). Under this framework, AI failures such as object misclassification or a neural network crash can be classified and addressed as safety hazards.
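In ISO 26262, the ASIL of a hazardous event follows from three ratings: severity (S1 to S3), exposure (E1 to E4) and controllability (C1 to C3). The sketch below uses a commonly cited shorthand that reproduces the standard's determination table by summing the three class numbers; the table in the standard remains the normative source. The function name is hypothetical, the handling of the S0/E0/C0 classes as "QM" is a simplification, and the ratings in the usage lines are invented for illustration rather than taken from a real HARA.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Map S/E/C class numbers to an ASIL using the sum shorthand."""
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("expected S0-S3, E0-E4, C0-C3")
    # Simplification: a class of 0 means no ASIL is required; reported as QM here.
    if 0 in (severity, exposure, controllability):
        return "QM"
    total = severity + exposure + controllability
    # Sums of 7, 8, 9 and 10 correspond to ASIL A, B, C and D; lower sums are QM.
    return {7: "A", 8: "B", 9: "C", 10: "D"}.get(total, "QM")

# Hypothetical ratings for two AI-related failure modes.
print("missed pedestrian at speed:", determine_asil(3, 4, 3))
print("infotainment model crash:", determine_asil(1, 2, 1))
```

The same AI component can therefore carry very different integrity requirements depending on the function it serves, since the rating attaches to the hazardous event and not to the model itself.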

The standard also indirectly applies to AI within software and hardware development. For example, Part 5. Hardware Development requires diagnostic coverage and safety mechanisms for critical hardware faults. This extends to SoCs or accelerators running AI inference (e.g. GPUs, NPUs), which must be safeguarded to prevent silent failures that could compromise AI workflows.

While ISO 26262 provides a baseline framework for addressing AI malfunction scenarios, it falls short in covering the non-deterministic behavior of AI systems. These gaps have prompted the development of complementary standards, ISO 21448 and ISO/PAS 8800, to more fully address AI-related safety risks.

II. ISO 21448: 2022 (Safety of the intended functionality, SOTIF)

Whereas ISO 26262 focuses on risks from system malfunctions, ISO 21448 addresses situations where the system behaves as designed but still poses safety risks under certain conditions. As with ISO 26262, terms explicitly referencing AI or machine learning are absent. Nevertheless, the standard is widely recognized as highly relevant to AI-driven systems, which are especially sensitive to incomplete data, edge cases and unknown scenarios.

One key concept appears in Clause 11. Hazardous Scenarios, which introduces the distinction between “known hazards” (anticipated cases) and “unknown hazards” (unanticipated conditions). The latter is particularly relevant to AI, as machine learning models are prone to failure when exposed to out-of-distribution inputs. The standard emphasizes the need to achieve acceptable residual risk even in such unknown conditions.
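To make the out-of-distribution concern concrete, the sketch below flags an input when a classifier's highest softmax probability falls below a threshold. This is a simple, widely used baseline and not something prescribed by ISO 21448; the function names, the threshold of 0.8 and the example logits are assumptions, and neural networks can be confidently wrong on unfamiliar inputs, so a check like this reduces rather than removes the risk.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of raw class scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def is_out_of_distribution(logits, threshold=0.8):
    """Flag the input when no class reaches the confidence threshold."""
    return max(softmax(logits)) < threshold

familiar_input = [8.0, 0.5, 0.1]    # one class clearly dominates
unfamiliar_input = [1.0, 0.9, 1.1]  # scores are nearly indistinguishable

print(is_out_of_distribution(familiar_input))
print(is_out_of_distribution(unfamiliar_input))
```

A flagged input would typically be routed to a more conservative behavior, such as a fallback response or a request for human review, instead of being acted on directly.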

Expanding beyond definitions, Clause 9. Verification and Validation stresses the importance of robust validation strategies that go beyond normal operating conditions. This is especially critical for AI/ML systems, as traditional deterministic testing methods cannot guarantee complete coverage of rare, long-tail scenarios.

By incorporating concepts of non-deterministic behavior and unquantifiable risks, ISO 21448 plays a crucial role in framing AI-related safety challenges in automotive systems. It highlights how limitations in AI perception and decision-making can result in unsafe outcomes. However, with methodologies for residual risk evaluation still relying on conventional statistical methods, there remain limitations in guaranteeing coverage for rare or unforeseen inputs.
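The limitation of conventional statistics can be shown with a short calculation. Assuming independent test trials and zero observed failures (a simple binomial model, not a method specified in ISO 21448), demonstrating that the per-trial failure probability is at most p with confidence C requires n trials such that (1 - p)^n <= 1 - C. The function name below is hypothetical.

```python
import math

def required_failure_free_trials(max_failure_prob: float, confidence: float) -> int:
    """Smallest n with (1 - p)^n <= 1 - C, assuming independent trials."""
    if not (0.0 < max_failure_prob < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("both arguments must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - max_failure_prob))

for p in (1e-3, 1e-6, 1e-9):
    n = required_failure_free_trials(p, confidence=0.95)
    print(f"p <= {p:g}: {n:,} failure-free trials")
```

The required number of trials grows roughly as 3/p at 95% confidence, so each thousandfold reduction in the target failure probability demands about a thousand times more failure-free evidence. This is why rare, long-tail scenarios are so hard to cover through testing alone.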

III. ISO/PAS 8800: 2024 (Safety and artificial intelligence)

Building on the foundations of ISO 26262 and ISO 21448, ISO/PAS 8800 provides the first global assessment framework dedicated to systematically evaluating AI systems in road vehicles. The document explicitly states its intent to extend and adapt the principles of functional safety (ISO 26262) and SOTIF (ISO 21448) to AI and machine learning elements.

ISO/PAS 8800 raises AI-specific safety concerns directly, linking identified hazards to clear safety requirements and goals. It details procedures covering the entire lifecycle of AI systems, including dataset quality management, model development and safe deployment practices. The standard also places emphasis on runtime monitoring and post-deployment governance, ensuring continuous oversight of AI performance.

Through this framework, ISO/PAS 8800 ensures that AI safety measures are embedded from the earliest stages of system design through post-deployment operation, closing gaps left by prior standards and providing a structured foundation for AI assurance in automotive systems.

Future Progress of AI in Automotive Safety

As illustrated in the previous sections, the automotive safety industry has approached AI from two contrasting angles: as a defense mechanism to strengthen safety levels, and as a potential risk factor requiring strict evaluation. Nevertheless, both perspectives converge on the same overarching goal: leveraging AI to improve the resilience of automotive systems against internal flaws (e.g. software errors, model weaknesses) and external risks (e.g. environmental hazards, cyber threats).

Looking ahead, the progress of AI in vehicle systems will center on two parallel developments: advancing innovation in AI-driven safety tools and establishing rigorous compliance and certification frameworks. As this dual evolution unfolds, AUTOCRYPT is committed to playing a leading role, not only by providing solutions that integrate AI to enhance safety and resilience but also by staying closely aligned with the evolving regulatory landscape that governs the safe deployment of AI-embedded vehicle systems.

Learn more about our products and solutions at https://autocrypt.io/all-products-and-offerings/.
